This past week, I had the opportunity to write an app to handle scanning in patients for CoViD testing in our city, Fishers, Indiana. Fishers is one of the top 10 places to raise a family in the United States. Reinforcing that reputation, the city is providing free CoViD testing to all 90,000 residents. The process is simple:

- Register online via an assessment

- Receive a patient ID

- Scan in at appointment, assign test, and take test

- Receive answers within 3 business days

Seems simple, right? Our city isn’t using the painful “touch the back of your eyeball” technique, either. Instead, it’s at the front of the nasal cavity – simple, painless, and you get results. It also ensures contact tracing where there are infections, so as a community we can help prevent the spread of disease.

But can we do it?

The problem is, roughly a week ago, Step 3 didn’t exist. The patient ID was from a survey with a QR code. The kit didn’t have any identifier whatsoever – it’s just one of many bagged kits in a box. And this data was being manually entered by other entities running similar tests. To give you an idea of how prone to error this process is, consider the patient ID looks like this:

Any chance for typos much? And that doesn’t solve the kit identifier problem – because there isn’t one.

Figure: A box of test kits.

Solving the Problem

So, last Saturday, I received an email from our City’s IT Director. He wanted to know if I knew anyone who could marry the patients’ ID with the kit ID for proper tracking. If you know me, this type of project is right up my alley. This project screamed MOBILE APP! I said “I’ll do it!” It would be like a weekend hackathon!

Most cities aren’t offering CoViD testing, and not a single state is offering such a service to all residents. Fishers is different – we’re a “vibrant, entrepreneurial city,” as our awesome forward-thinking mayor, Scott Fadness, often exclaims. His team takes novel approaches to addressing community needs, and this was no different.

Where there is testing, as I understand it, it’s with PCs and handheld scanners. What a nightmare it must be to keep such a setup running – with a laptop, with software that’s manually installed, and patients scanned with a handheld that can’t scan through glass. I’ve worked with those setups before – the technology issues are a huge PITA. Let alone deploying updates in any timely fashion!

We decided at the get-go a mobile app would be the right approach. When asked about barcode scanners, I explained “We don’t need one.” The built-in cameras on modern day cell phones are more than capable of scanning any type of barcode, QR code, and so forth. As an added bonus, any cell phone we use will have Internet connectivity, with Wi-Fi as a backup. The beauty of it is one single device, one single app, everything self-contained and easy to use.


The Requirements

After our initial discussion, and a bit of back and forth, this was the decided-upon workflow:

- Scan the Patient QR Code and Kit ID.

- Come up with a Kit Scanning Process. We decided on CODE39 barcodes that would be printed beforehand so technology wouldn’t be an issue each day.

- Store the Patient ID and Kit ID for later retrieval. This ended up being “uploaded” to the survey itself, ensuring we didn’t need to store any PII, and didn’t have to build a back-end data store. Small favors…

And this was the mockup:

Figure: Whiteboarded app.

Figure: Rough brain dump with ideas.

Initially, we talked about generating the Kit barcode on the mobile device, then printing it to a wireless printer in the testing bay. This certainly seemed possible. However, the more I thought about it, the more I realized we could simply pre-print the labels and affix them as needed. This would provide some obvious benefits:

- We wouldn’t have to come up with a mobile printing solution, which can be tricky, and is not a simple problem to solve cross-platform.

- We’d keep a printer breakdown out of the picture, ensuring technology didn’t “get in our way.”

The key is to get the patients in, tested, and out as efficiently as possible. The simpler we kept the process, the less could go wrong. So, on-demand printing was eliminated, and we’d simply pre-print labels instead. They’d be affixed to the test kit and then assigned to a patient.

Common Needs of Mobile Apps

In deciding on the app development approach, I considered that every mobile app I’ve built has had three primary needs that must be addressed:

- Where does data come from? Usually this is an API or the user. If an API doesn’t exist, considerable time is necessary to build one.

- Where does data go? Also usually an API for some data store. Same API issue.

- How does the user interact with data? The app is useless if the user can’t figure it out and, if possible, enjoy the experience. This can have design cost and time impacts.

For Need 1, we knew we had a QR Code. BUT how would we know it’s valid? How would we get the patient data? Well, it just so happened the survey provider had an API. Sweet! We hopped on a call with them and they provided an API key and documentation. That’s how API access should work! They even provided a RegEx to validate the scanned patient ID, which internally to them was actually just a survey ID.

What about the kits? We decided to use a CODE39 barcode font and print on standard Avery labels. We came up with a standard naming and numbering convention, a RegEx to validate, and would pre-print them – a few hundred per day. This would ensure the labels were verifiable after scanning, and that printing wouldn’t be an issue each day. We’d take care of technology problems beforehand – such as printer issues – so they wouldn’t impact patient processing.

Figure: A combination of Excel to generate the labels following the naming convention, plus mail merge to insert them onto the off-the-shelf labels.
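To make the pre-printed label idea concrete, here is a minimal Python sketch of generating a batch of kit IDs and validating a scanned one. The `FSH-<date>-<sequence>` convention, the pattern, and the function names are all assumptions for illustration; the actual naming convention and RegEx aren't shown in this article, and the real app was built with Xamarin, not Python. The fixed points are the CODE39 character set (digits, uppercase letters, space, and a handful of symbols) and the fact that CODE39 barcode fonts expect the text wrapped in `*` start/stop characters.

```python
import re
from typing import List

# Characters that CODE39 can encode (the standard 43-character set).
CODE39_CHARS = set("0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ-. $/+%")

# Hypothetical convention: prefix, six-digit date, four-digit sequence,
# e.g. FSH-200501-0001. The real convention is not given in the article.
KIT_ID_PATTERN = re.compile(r"FSH-\d{6}-\d{4}")


def make_kit_ids(date_code: str, count: int) -> List[str]:
    """Generate sequential kit IDs for one day's batch of labels."""
    return [f"FSH-{date_code}-{n:04d}" for n in range(1, count + 1)]


def is_code39_encodable(text: str) -> bool:
    """True if every character can be represented in CODE39."""
    return bool(text) and all(ch in CODE39_CHARS for ch in text)


def is_valid_kit_id(scanned: str) -> bool:
    """Check a scanned value against the (assumed) kit ID convention."""
    candidate = scanned.strip()
    return is_code39_encodable(candidate) and bool(KIT_ID_PATTERN.fullmatch(candidate))


def to_barcode_font_text(kit_id: str) -> str:
    """Wrap the ID in '*' start/stop characters for a CODE39 font."""
    return f"*{kit_id}*"
```

A spreadsheet column of `to_barcode_font_text(...)` values, rendered in a CODE39 font, is roughly what a mail merge onto label sheets would consume.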

OK, now for Need 2… We can get the data, but we have to store it somewhere. Initially, we thought about building a separate back-end. The survey provider, Qualtrics, explained we could send the data back to their system and store it with the initial survey. Well, that was much better! No new storage API development was needed, as they already had the infrastructure in place. Building a new, solid, secure API in a short period of time would have been no small task.

For Need 3, the user experience, I borrowed my grandfather’s phrase: It must require a PH, D. Push Here, Dummy. I wanted “three taps and a send,” as follows:

- Scan the Patient – Once scanned, look the user up and verify they exist, showing confirmation details on the screen.

- Scan the Kit – Ensure the barcode matches the expected format.

- Confirm & Submit – Prompt to ensure patient details, such as name and postal code, have been verified, then confirm the entry has been saved.


That’s it – no chance for typos, and verification at every step, helping things go right. Little animations would show when a step had been completed, and scans could be done in any order.
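The "three taps and a send" flow can be modeled as a small piece of state that only allows submission once both scans and the confirmation are in place. This is a minimal Python sketch under assumed names (`ScanSession`, `ready_to_submit`, and so on); it is not the app's actual code, and it leaves out the lookup and validation that happen at each scan.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScanSession:
    """Tracks one patient/kit pairing from first scan to submission."""
    patient_id: Optional[str] = None
    kit_id: Optional[str] = None
    details_confirmed: bool = False

    def scan_patient(self, patient_id: str) -> None:
        self.patient_id = patient_id
        # A different patient means the details must be confirmed again.
        self.details_confirmed = False

    def scan_kit(self, kit_id: str) -> None:
        self.kit_id = kit_id

    def confirm_details(self) -> None:
        if self.patient_id is None:
            raise ValueError("Scan a patient before confirming details")
        self.details_confirmed = True

    @property
    def ready_to_submit(self) -> bool:
        """Both 'green checkmarks' are present and details are confirmed."""
        return (
            self.patient_id is not None
            and self.kit_id is not None
            and self.details_confirmed
        )

    def submit(self) -> dict:
        """Return the record to send, then reset for the next patient."""
        if not self.ready_to_submit:
            raise ValueError("Both scans and confirmation are required")
        record = {"patient_id": self.patient_id, "kit_id": self.kit_id}
        self.patient_id = None
        self.kit_id = None
        self.details_confirmed = False
        return record
```

Because the two scan methods are independent, the kit can be scanned before the patient or the other way around, which matches the "any order" behavior described above.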

Figure: Texting back and forth, getting our bases covered.

Xamarin As The Dev Stack

We’re building a mobile app here, and we may need it for multiple platforms: iOS (both iPhone and iPad), Android, and perhaps even Windows. Building each platform separately would take a lot of time to complete – time we didn’t have. It was April 25, we needed to be testing by April 27, and we were going live May 1.

The right choice was Xamarin with Xamarin.Forms – Microsoft’s cross-platform mobile framework. It’s similar to React Native, but you have full access to the underlying platform APIs. That’s because you’re building a real native app, not an interpreted overlay. With a single solution, we could build the iOS, Android, and UWP (Windows) apps, with 90% or more code sharing. I’m a Xamarin certified mobile developer, so this was going to be fun!

Figure: The Xamarin app in Visual Studio.

First Draft

Within a few hours, I had an alpha version of the app running. It was rough, and didn’t have the best UI, but it was scanning and talking with the Qualtrics API. Hey, once the base stuff’s working, you can make it look pretty!

The app consisted of a few core components:

- App Service – Managing any processes the app needed completed, such as retrieving patient survey details, updating a patient survey record, verifying scanned code formatting, and so forth.

- API Service – Talking back and forth with the Qualtrics API.

- Analytics Service – Tracking aspects of the application, such as kit scan successes and failures, any exceptions that may occur, and so forth, so we can improve the app over time.
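One way to picture how these three components fit together is the sketch below, written in Python purely for illustration. Every name in it is an assumption: `SurveyApi` stands in for the API Service, `InMemorySurveyApi` is a fake used in place of real Qualtrics calls (whose endpoints are not described here), and the patient ID pattern is made up.

```python
import re
from collections import Counter
from typing import Dict, Optional, Pattern, Protocol


class SurveyApi(Protocol):
    """What the app needs from the survey provider (hypothetical shape)."""
    def get_response(self, patient_id: str) -> Optional[dict]: ...
    def attach_kit(self, patient_id: str, kit_id: str) -> None: ...


class InMemorySurveyApi:
    """Stand-in for the real API, useful for tests and demos."""
    def __init__(self, responses: Dict[str, dict]) -> None:
        self._responses = responses

    def get_response(self, patient_id: str) -> Optional[dict]:
        return self._responses.get(patient_id)

    def attach_kit(self, patient_id: str, kit_id: str) -> None:
        self._responses[patient_id]["kit_id"] = kit_id


class Analytics:
    """Counts named events such as scan successes and failures."""
    def __init__(self) -> None:
        self.events: Counter = Counter()

    def track(self, name: str) -> None:
        self.events[name] += 1


class AppService:
    """Coordinates validation, lookup, and saving on behalf of the UI."""
    def __init__(self, api: SurveyApi, analytics: Analytics,
                 patient_pattern: Pattern[str]) -> None:
        self._api = api
        self._analytics = analytics
        self._patient_pattern = patient_pattern

    def lookup_patient(self, scanned: str) -> Optional[dict]:
        # Reject badly formatted IDs before spending a network call.
        if not self._patient_pattern.fullmatch(scanned):
            self._analytics.track("patient_scan_invalid")
            return None
        patient = self._api.get_response(scanned)
        self._analytics.track("patient_found" if patient else "patient_not_found")
        return patient

    def assign_kit(self, patient_id: str, kit_id: str) -> None:
        self._api.attach_kit(patient_id, kit_id)
        self._analytics.track("kit_assigned")
```

Keeping the API behind a small interface like this means the UI and validation logic can be exercised without network access, which helps when a project has only days to go from idea to production.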

Figure: Build 1 of the app. I first tested with my Android device, then rolled out to iOS, testing on both an iPhone and iPad.

I also had to ensure scanning went off without a hitch. After all, that’s what this app is doing – getting all the data quickly, then tying it together. I configured the scanning solution to only scan QR codes when scanning the patient ID, and only CODE39 barcodes when scanning kits. That way, if the codes were next to each other, the tech wouldn’t scan the wrong item and cause confusion. Remember, the technicians are medical techs, not computer techs – any technology problem could stop the patient processing flow. We needed to ensure the technology didn’t get in the way. You do that by testing thoroughly, and keeping the end user in mind.
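The idea of tying each scan step to a single barcode format can be sketched as a simple lookup. This Python example is an assumption-laden illustration: the article doesn't name the scanning library, and a real library would typically be told which formats to decode up front rather than filtering results afterwards. The enum and function names are hypothetical.

```python
from enum import Enum
from typing import Optional


class BarcodeFormat(Enum):
    QR_CODE = "QR_CODE"
    CODE_39 = "CODE_39"


class ScanTarget(Enum):
    PATIENT = "patient"
    KIT = "kit"


# Each scan step accepts exactly one symbology.
ALLOWED_FORMATS = {
    ScanTarget.PATIENT: {BarcodeFormat.QR_CODE},
    ScanTarget.KIT: {BarcodeFormat.CODE_39},
}


def accept_scan(target: ScanTarget, detected: BarcodeFormat,
                text: str) -> Optional[str]:
    """Return the decoded text only if its format fits the current step."""
    if detected not in ALLOWED_FORMATS[target]:
        return None  # Ignore, e.g., a kit barcode seen during the patient step.
    return text
```

With this in place, a kit label sitting next to the patient's QR code is simply ignored during the patient step, so the technician never has to recover from scanning the wrong item.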

Figure: QR code and CODE39 barcodes for testing.

Final Approach and User Experience

Once the UI was working, I added final touches to the UX to make it friendly and easy to use:

- When a technician successfully scanned a patient, the information would appear and a green checkmark would animate in. This would clearly indicate that step was completed. If there was an issue with the verification, they would be prompted to scan again. Optionally, they could manually enter the patient ID, which would follow the same validation steps.

- When a kit was scanned, another green checkmark would animate in, signifying that step, too, was complete.

- Once both steps had been completed, the technician would clearly understand the two greens meant “good to go” and could submit the patient data. They would be prompted to confirm they had verified all patient data and everything on the screen was correct.

- Once patient data was successfully transmitted, a confirmation dialog would appear. Upon dismissal, the UI would animate to the reset state, making it clear it’s OK to proceed to the next patient.

Devices, TestFlight, and Apple, Oh My!

So the app was in a good state. How were we going to get it on devices? This isn’t an app that we want in the App Store. It’s not a general consumer app – at least, not yet. TestFlight to the rescue! We’d push it to Apple’s TestFlight app testing service, then enroll all the iOS devices. That would ensure that, as we tweaked the app, we could quickly push the updates without any messy manual installs.

For those that have deployed iOS apps before, you know this isn’t a fast process. The first version of any app into TestFlight must be reviewed by Apple. I uploaded the first version and waited…

Roughly a day later, Apple rejected the app. BUT WHY? Well, we hadn’t provided any sample QR codes or bar codes to scan, so they rejected it. UGH! Really?? I didn’t even know that was a testing requirement! You learn something new every day… So I sent a URL with some examples to test with, as you can’t upload files to the testing site, and waited. Hours later, thankfully, Apple approved the app for testing!

Figure: Apple beta review rejection email.

We enrolled the various iPhones and iPads in TestFlight and we were able to start testing. Other than a restriction with SSL over the City’s network, which was quickly resolved, we had our devices ready to go. Not bad for under 48 hours!!

Note that, once an app is in TestFlight, additional builds go through almost instantly. This ensured we could tweak as needed and not wait 24+ hours to validate each time.

Figure: We could release updates with velocity after the initial approval.

Rolling It Out – Dress Rehearsal

We wanted to make sure the app worked without a hitch. A day before release, we had a “dress rehearsal.” Everyone would be ready for the testing, and we’d introduce the app. It’s a small part, but it ties it all together. Tracy, the I.T. Director, and I had been testing in earnest prior to this time, and we were feeling pretty good about it.

That morning, I walked the users through the app, joking about the PH, D requirement. Prior to my arrival, they had been testing the process on one of our citizens, who must have been quite tired from all the work:

The pressing questions were:

- Can we scan a QR code through glass, so a citizen doesn’t have to roll down their window? Yes, unless it’s super tinted, which would be illegal anyway.

- What if we can’t scan the code? This wasn’t an issue, except for a QR code variant issue discussed later, and manual entry was supported just in case.

- What if Internet access goes down? We had cellular backup on all devices.

- How will we apply the barcode to the kit? Peel and stick, then scan. We scan after removal from the main sheet so we don’t scan the wrong code.

- What if the QR code is used more than once? This wasn’t an issue, as the name and appointment time wouldn’t match. In a later version we added a prompt when the scanned patient had already been through the process.

Here are a few photos from that morning – that was a lot of fun!

Day 1!

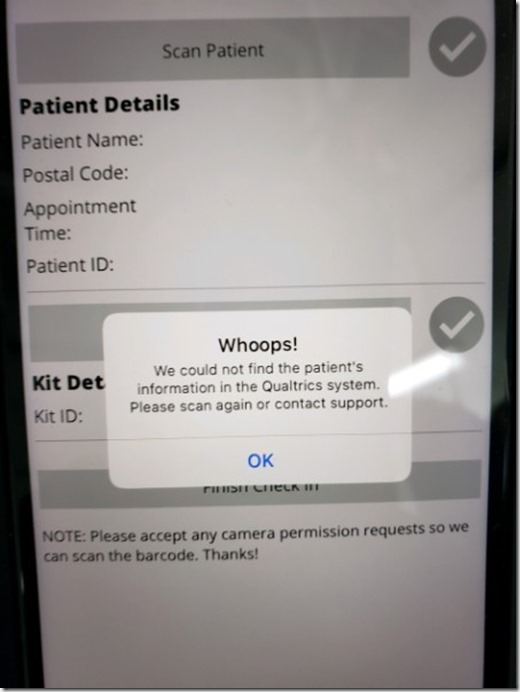

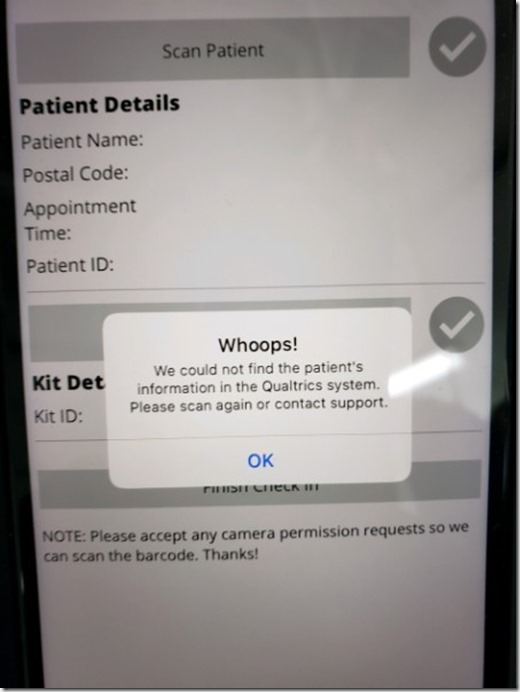

Day 1 was here, and real citizens were about to get tested. I slept well the night before, knowing we had tested thoroughly. We only had one hiccup: The QR code in the email was different from the QR code on the confirmation website. This was causing validation errors, as the website QR code’s patient ID couldn’t be found in the system. Not an app issue, but that doesn’t matter.

Figure: Ruh-roh! The QR codes weren’t matching between different sources. Yellow alert!

The survey provider quickly addressed the issue and we were good to go. It wasn’t a big deal – they provided a website to manually enter the patient ID for scanning with a properly generated QR code, and it barely impacted patients. Day 1 was a rousing success!

Figure: The app in use!

Wrapping Up

Going from no app to an app being used with patients in less than one week was an incredible experience. It feels great to help the community during this period of uncertainty. I’m grateful our city wanted to make the process as seamless as possible, using technology to help things go right, and providing me the opportunity to assist. I’m thankful that, once again, Xamarin was a great solution.

I’ll probably have a technology walk-through in the near future – I didn’t want to concentrate on the underpinnings of the application for this article. I’ll leave that to a discussion for the Indy Xamarin Meetup.

Figure: The final app, with my info scanned in.
